Execute Playbooks with Webhook

As an alternative to running playbooks on a schedule, you can configure a playbook to run when an external application, such as Splunk, makes a POST request to a Webhook URL.

You can also supply additional data in a request body when making requests to the Webhook address. The playbook then has access to the supplied data through the loadEventsFromExecutionContext() operator.

Note

By default, stream executions are limited to 5 requests in each 5-minute interval. Requests generated beyond that limit are queued and may be coalesced into fewer requests. You can reconfigure the limits, but if you do, be aware of the additional load that can result from increased numbers of stream executions.

Set Up a Stream for a Webhook Trigger

To set up a playbook for a Webhook trigger:

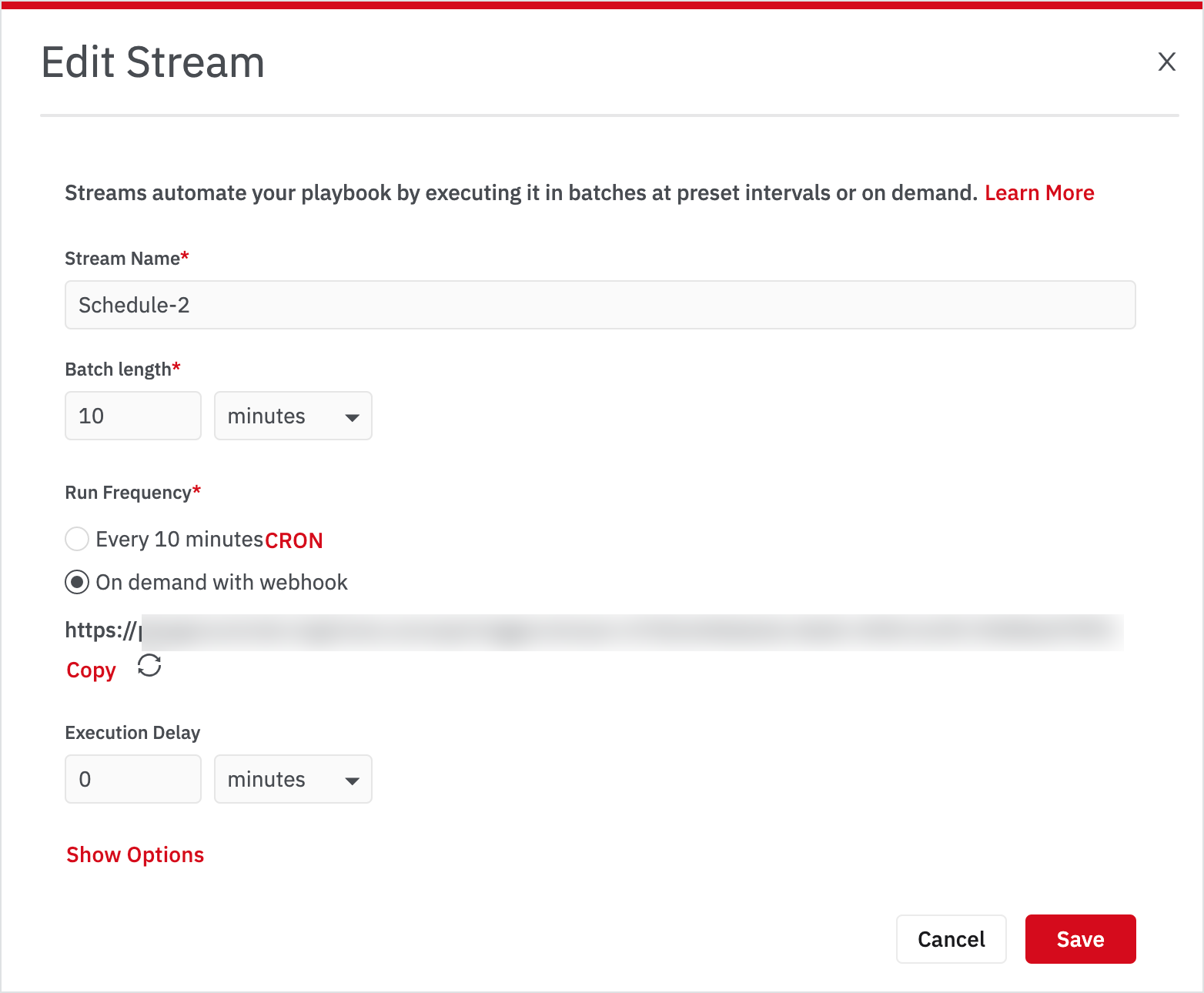

- Edit an existing stream.

- On the Edit Stream page, edit the stream name and batch length and select the On demand with webhook option.

- The Webhook URL is displayed. Copy the URL and save it.

- Click Save.

The Webhook URL is now available for use. To execute the stream, send a POST request to the Webhook URL, as in the following example curl command.

curl -X POST \
  "https://xyz.logichub.com/api/trigger/stream-5784/f0e58257-b358-4986-ae4c-38d39b7d5fe3" \
  -H "Content-Type: application/json" \
  -d '{}'

The command output includes a request ID.

{
  "requestId": "7aa3c4a7-d21e-4ab4-8233-740e1a367252"
}
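The same request can be sent from a script. The following minimal Python sketch uses only the standard library; build_trigger_request and trigger_stream are hypothetical helper names, and the sketch assumes the response body is the JSON object shown above with a requestId field.

```python
import json
import urllib.request
from typing import Optional


def build_trigger_request(webhook_url: str, body: Optional[dict] = None) -> urllib.request.Request:
    """Build a POST request for a stream Webhook URL (hypothetical helper)."""
    # An empty JSON object is sent when no additional data is supplied, as in the curl example.
    data = json.dumps(body if body is not None else {}).encode("utf-8")
    return urllib.request.Request(
        webhook_url,
        data=data,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def trigger_stream(webhook_url: str, body: Optional[dict] = None, timeout: float = 30.0) -> str:
    """Send the POST request and return the requestId from the JSON response."""
    request = build_trigger_request(webhook_url, body)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        payload = json.load(response)
    return payload["requestId"]
```

Because urlopen raises urllib.error.HTTPError for error statuses, a request rejected with HTTP 429 surfaces as an exception that the caller can catch and retry later.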

Use the request ID to fetch the state of the stream trigger with the following API call. This call requires an API token in the X-Auth-Token header.

curl -X GET \
  https://xyz.logichub.com/api/trigger/stream/request/27f5f7ee-74ca-42e4-a226-81ecc0580fe2 \
  -H 'Content-Type: application/json' \
  -H 'X-Auth-Token: <api token>'

The following is an example response when the stream is scheduled and a batch is created.

{
  "result": {
    "requestId": "27f5f7ee-74ca-42e4-a226-81ecc0580fe2",
    "expiryAt": 1569149800083,
    "createdAt": 1569148300083,
    "batchId": "batch-1822643",
    "state": "scheduled"
  }
}

The following is an example response when the stream is queued.

{
  "result": {
    "requestId": "27f5f7ee-74ca-42e4-a226-81ecc0580fe2",
    "expiryAt": 1569149800083,
    "createdAt": 1569148300083,
    "state": "queued"
  }
}

If the stream cannot be scheduled before the request expires (expiryAt), the request times out and its state is timedout. In this case, the client must trigger the stream again.

{
  "result": {
    "requestId": "27f5f7ee-74ca-42e4-a226-81ecc0580fe2",
    "expiryAt": 1569149800083,
    "createdAt": 1569148300083,
    "state": "timedout"
  }
}
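A client usually polls this call until the request is either scheduled (and a batchId is available) or timedout (and the stream must be triggered again). The Python sketch below shows such a loop; fetch_state is a hypothetical callable that performs the GET call above and returns the parsed JSON, and the poll interval and attempt limit are arbitrary example values rather than documented settings.

```python
import time
from typing import Callable


class RequestTimedOut(Exception):
    """Raised when the trigger request reaches the timedout state."""


def wait_for_batch_id(
    fetch_state: Callable[[], dict],
    poll_seconds: float = 10.0,
    max_attempts: int = 60,
    sleep: Callable[[float], None] = time.sleep,
) -> str:
    """Poll the stream request until it is scheduled and return its batchId.

    fetch_state is assumed to return the parsed response of
    GET /api/trigger/stream/request/<requestId>.
    """
    for _ in range(max_attempts):
        result = fetch_state()["result"]
        state = result["state"]
        if state == "scheduled":
            return result["batchId"]
        if state == "timedout":
            # The request expired before it could be scheduled; trigger the stream again.
            raise RequestTimedOut(result["requestId"])
        # Any other state (for example, queued) means the request is still waiting.
        sleep(poll_seconds)
    raise TimeoutError("stream request was not scheduled within the polling budget")
```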

After the stream is scheduled, use the following API call to check the stream execution status. Use the batchId value from the stream request API response.

curl -X GET \
  'https://xyz.logichub.com/api/batch/batch-1822939/results?pageSize=10&after=0' \
  -H 'Content-Type: application/json' \
  -H 'X-Auth-Token: <api token>'

The following is a sample response.

{
  "result": {
    "status": "executing",
    "totalSize": 0,
    "data": []
  }
}

When stream execution results are available, the status transitions to ready. The following is an example with the ready status.

curl -X GET 'https://xyz.logichub.com/api/batch/batch-1822939/results?pageSize=10&after=0' \
  -H 'Content-Type: application/json' \
  -H 'X-Auth-Token: <api token>'

{
  "result": {
    "status": "ready",
    "totalSize": 30,
    "data": [
      {
        "id": "correlation-eyJub2RlS2V5Ijp7ImZsb3dJZCI6ImZsb3ctNDUwNDQiLCJub2RlSWQiOiIwMTg5MWUzNS0yMzM5LTRhZTctOGY5Mi05NmYzN2RhYjQ4MTIifSwiZmxvd1ZlcnNpb24iOnsidmVyc2lvbiI6NTd9LCJsaHViSWQiOiIxIiwiaW50ZXJ2YWwiOnsiZnJvbSI6MTU2OTE1MzExNTgxOCwidG8iOjE1NjkxNTQ5MTgyMjh9fQ==",
        "schema": {
          "columns": [
            { "name": "lhub_id", "dataType": "string", "visible": false, "semanticType": "other" },
            { "name": "exit_code", "dataType": "int", "visible": true, "semanticType": "other" },
            { "name": "result", "dataType": "string", "visible": true, "semanticType": "other" },
            { "name": "stdout", "dataType": "string", "visible": true, "semanticType": "other" },
            { "name": "stderr", "dataType": "string", "visible": true, "semanticType": "other" },
            { "name": "1", "dataType": "int", "visible": true, "semanticType": "other" },
            { "name": "lhub_page_num", "dataType": "bigint", "visible": false, "semanticType": "other" }
          ]
        },
        "columns": {
          "stdout": "",
          "result": "{\"error\": null, \"has_error\": false, \"result\": {\"customer_id\": \"ALFKI\"}}",
          "exit_code": "0",
          "lhub_page_num": "0",
          "1": "1",
          "stderr": "",
          "lhub_id": "TableNameWithLhubId(`queryCustomers`,1)"
        }
      }
    ]
  }
}

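The totalSize field reports the total number of results. To obtain the remaining results, fetch the next pages by increasing the after parameter by pageSize.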

curl -X GET 'https://xyz.logichub.com/api/batch/batch-1822939/results?pageSize=10&after=10' \
  -H 'Content-Type: application/json' \
  -H 'X-Auth-Token: <api token>'
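Collecting a complete batch combines the status check with pagination. The Python sketch below assumes that fetch_page is a hypothetical callable performing the results call for a given after offset and returning the parsed JSON, that after advances by pageSize (as in the two example calls), and that error, skipped, and expired-or-invalid-batch mean that waiting longer will not produce results.

```python
import time
from typing import Callable, List

# Statuses after which waiting longer will not produce results (assumption based on the status table).
STOP_STATUSES = {"error", "skipped", "expired-or-invalid-batch"}


def fetch_all_results(
    fetch_page: Callable[[int, int], dict],
    page_size: int = 10,
    poll_seconds: float = 10.0,
    max_polls: int = 60,
    sleep: Callable[[float], None] = time.sleep,
) -> List[dict]:
    """Collect every result row of a batch, waiting while it is not ready yet.

    fetch_page(page_size, after) is a hypothetical callable that performs
    GET /api/batch/<batchId>/results?pageSize=<page_size>&after=<after>
    and returns the parsed JSON response.
    """
    rows: List[dict] = []
    after = 0
    polls = 0
    while True:
        result = fetch_page(page_size, after)["result"]
        status = result["status"]
        if status in STOP_STATUSES:
            raise RuntimeError(f"batch ended with status {status!r}")
        if status != "ready":
            # For example, "executing": results are not available yet.
            polls += 1
            if polls > max_polls:
                raise TimeoutError("batch results were not ready in time")
            sleep(poll_seconds)
            continue
        page = result["data"]
        rows.extend(page)
        after += page_size
        # Stop after the last page; an empty page also ends the loop defensively.
        if not page or after >= result["totalSize"]:
            return rows
```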

The following table lists the status values.

| Status | Description |
|---|---|
| scheduled | A batch is scheduled for execution. |
| queued | A batch is queued for execution but has not started executing yet. |
| timedout | The request could not be scheduled before it expired. The client must trigger the stream again. |
| executing | The playbook is executing for this batch. |
| ready | Correlations have been computed and results are available. |
| error | An error occurred during any step. |
| skipped | An error occurred during any step. |
| expired-or-invalid-batch | The batch results have been garbage collected or removed, or the batch doesn't exist. |

Query Parameters to the Webhook

The optional intervalStart and intervalEnd parameters provide the time range for stream execution. The following rules apply to the time range settings.

- intervalStart and intervalEnd times must be given in Unix epoch time in milliseconds.

- If both a start and end time are provided, both are used.

- If only a start time is provided, the end time is the start time plus the batch interval.

- If only an end time is provided, the start time is the end time minus the batch interval.

- If neither a start nor an end time is provided, the start time is the current time minus the batch interval, and the end time is the current time.

Note

Batch interval refers to batch length.

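For example, the following request sets both parameters. The URL is quoted so that the shell does not interpret the & character.

curl -X POST --header "Content-Type: application/json" -d '{}' \
  "https://xyz.logichub.com/api/trigger/stream-5234/717e995a-6fdd-42b7-a2c2-ce3e9e613d63?intervalStart=1570468818221&intervalEnd=1570468848221"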
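The time range rules and the query string can be mirrored in a short Python sketch, for example to predict which window a request will cover before sending it. resolve_interval and with_interval are hypothetical helper names; batch_length_ms stands for the stream's batch length, and all times are Unix epoch milliseconds.

```python
import time
from typing import Optional, Tuple
from urllib.parse import urlencode


def resolve_interval(
    interval_start: Optional[int],
    interval_end: Optional[int],
    batch_length_ms: int,
    now_ms: Optional[int] = None,
) -> Tuple[int, int]:
    """Apply the documented rules and return (start, end) in epoch milliseconds."""
    if interval_start is not None and interval_end is not None:
        return interval_start, interval_end
    if interval_start is not None:
        return interval_start, interval_start + batch_length_ms
    if interval_end is not None:
        return interval_end - batch_length_ms, interval_end
    if now_ms is None:
        now_ms = int(time.time() * 1000)
    return now_ms - batch_length_ms, now_ms


def with_interval(webhook_url: str, interval_start: Optional[int] = None, interval_end: Optional[int] = None) -> str:
    """Append whichever of intervalStart/intervalEnd are given as query parameters."""
    params = {}
    if interval_start is not None:
        params["intervalStart"] = interval_start
    if interval_end is not None:
        params["intervalEnd"] = interval_end
    return f"{webhook_url}?{urlencode(params)}" if params else webhook_url
```

Building the query string with urlencode avoids hand-assembling the & separator.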

Supplying Additional Data to the Webhook

To use additional data supplied to the Webhook, edit the playbook and insert a step that uses the loadEventsFromExecutionContext() operator.

The operator's argument is a parent table that provides a JSON body with additional data for Webhook executions. While you author the playbook, the JSON from the parent table lets you test it without setting up a running stream. It can also provide additional data to the playbook if the playbook itself does not contain all of the desired data.

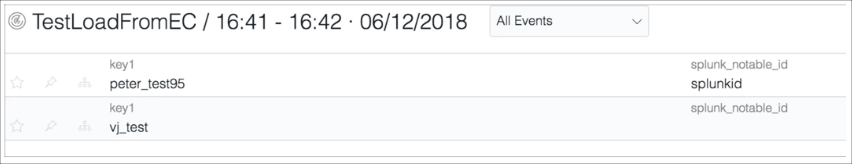

When the playbook runs through the Webhook, the output of loadEventsFromExecutionContext() contains the body parameters sent in the Webhook request. For example, the following request sends two rows:

curl -XPOST --header "Content-Type: application/json" -d '{"rows": [{"key1": "peter_test95", "splunk_notable_id":"splunkid", "lhub_id": "10"}, {"key1": "vj_test", "lhub_id": "11"}]}' https://xyz.logichub.com/api/trigger/stream-5234/717e995a-6fdd-42b7-a2c2-ce3e9e613d63

If the provided body contains the key rows whose value is an array of objects made of key-value pairs, the loadEventsFromExecutionContext() operator parses the data and formats it into a table. For the request above, the body is:

{
  "rows": [
    {
      "key1": "peter_test95",
      "splunk_notable_id": "splunkid",
      "lhub_id": "10"
    },
    {
      "key1": "vj_test",
      "lhub_id": "11"
    }
  ]
}

If the provided body isn't in this format, the loadEventsFromExecutionContext() operator loads the provided body contents as a string in its raw structure.
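The format rule can be checked on the client before sending a request. The Python sketch below approximates the documented rule and is not the operator's implementation: body_format is a hypothetical helper that reports "table" when the body has a rows key whose value is an array of objects, and "raw" otherwise.

```python
import json


def body_format(body_text: str) -> str:
    """Classify a Webhook body as "table" (rows of key-value objects) or "raw"."""
    try:
        body = json.loads(body_text)
    except json.JSONDecodeError:
        # Not JSON at all: expected to be loaded as a raw string.
        return "raw"
    rows = body.get("rows") if isinstance(body, dict) else None
    if isinstance(rows, list) and all(isinstance(row, dict) for row in rows):
        return "table"
    return "raw"
```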

Stream Request Coalescing/Merging

Rate Limits on On-Demand Streams

For system stability, on-demand streams are rate limited. Requests that exceed the rate limit are queued.

By default, five requests can be made within a 5-minute period. The limit is controlled by the following properties.

lhub.stream.rateLimitterPermits=5 // 5 requests

lhub.stream.rateLimitterRefillTime=300000 // 5 minutes

Requests that exceed the limit set by these properties are queued. By default, the queue length is 20. The following property controls the queue length.

lhub.stream.rateLimitterQueueSize=20 // queue length

If the queue is full, further requests are rejected, and the Webhook API returns the HTTP error TOO_MANY_REQUESTS (status code 429).

The queue is drained at the rate set by the rate limit configuration, that is, by default, five requests per 5-minute interval. Queued requests are also subject to a second optimization: they can be merged into fewer requests, as explained in the following section.

Note

These limits are global across all on-demand streams.

The queue is held in memory and is designed to absorb occasional spikes, so queued requests are lost whenever the LogicHub application restarts. If requests are processed quickly, a larger queue is fine; otherwise, increase the parallelism.
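To see how the permits, refill time, and queue size interact, the following Python sketch simulates a simplified token bucket. It is a model under stated assumptions, not LogicHub's implementation: the bucket is assumed to refill to rateLimitterPermits tokens once every rateLimitterRefillTime milliseconds, queued requests are assumed to be served first-in, first-out when tokens are refilled, and request merging is not modeled.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Deque, List


@dataclass
class StreamRateLimiter:
    """Simplified model of the on-demand stream rate limiter (assumption, not LogicHub code)."""

    permits: int = 5            # lhub.stream.rateLimitterPermits
    refill_ms: int = 300_000    # lhub.stream.rateLimitterRefillTime (must be positive)
    queue_size: int = 20        # lhub.stream.rateLimitterQueueSize
    tokens: int = field(init=False)
    last_refill_ms: int = 0
    queue: Deque[str] = field(default_factory=deque)
    started_from_queue: List[str] = field(default_factory=list)

    def __post_init__(self) -> None:
        self.tokens = self.permits

    def advance(self, now_ms: int) -> None:
        """Refill the bucket once per elapsed refill period and drain queued requests."""
        while now_ms - self.last_refill_ms >= self.refill_ms:
            self.last_refill_ms += self.refill_ms
            self.tokens = self.permits
            while self.tokens > 0 and self.queue:
                self.started_from_queue.append(self.queue.popleft())
                self.tokens -= 1

    def submit(self, request_id: str, now_ms: int) -> str:
        """Return "started", "queued", or "rejected" (the Webhook API answers 429)."""
        self.advance(now_ms)
        if self.tokens > 0:
            self.tokens -= 1
            return "started"
        if len(self.queue) < self.queue_size:
            self.queue.append(request_id)
            return "queued"
        return "rejected"
```

With the default values, a burst of 30 simultaneous requests in this model starts 5, queues 20, and rejects 5 with HTTP 429; the next refill starts 5 of the queued requests.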

Merging/Coalescing of Stream Requests

Request coalescing is an optimization that is useful in the following cases:

- There are many requests in a short span of time.

- A single stream execution can handle the required logic of all incoming requests.

All requests that are queued are candidates for merging when they are picked from the queue.

Requests are merged only if they meet all of the following criteria:

- The requests are from the same stream.

- The requests have the same input parameters (on-demand streams can take parameters).

- The gap between their time intervals is less than one hour, the default value of lhub.stream.rateLimitterMaxTimeRangeGap (3600000 ms).

For example, the following requests are merged:

| StreamID | Input Params | Start Time | End Time |
|---|---|---|---|
| stream-1 | {"field1": "value1", "field2": "value2"} | 12:00 | 14:00 |
| stream-1 | {"field1": "value1", "field2": "value2"} | 13:00 | 15:00 |

Merged request:

| StreamID | Input Params | Start Time | End Time |
|---|---|---|---|
| stream-1 | {"field1": "value1", "field2": "value2"} | 12:00 | 15:00 |
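The merge criteria and the example can be expressed as a small Python sketch. It is an illustration, not the scheduler's code, and it assumes that the gap is the time between the end of the earlier interval and the start of the later one (0 when they overlap), that the gap must be strictly less than lhub.stream.rateLimitterMaxTimeRangeGap, and that a merged request covers both intervals. StreamRequest and try_merge are hypothetical names.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass(frozen=True)
class StreamRequest:
    stream_id: str
    params: Tuple[Tuple[str, str], ...]  # input parameters as sorted (key, value) pairs
    start_ms: int
    end_ms: int


def interval_gap_ms(a: StreamRequest, b: StreamRequest) -> int:
    """Time between the two intervals; 0 if they overlap or touch."""
    earlier, later = sorted((a, b), key=lambda r: r.start_ms)
    return max(0, later.start_ms - earlier.end_ms)


def try_merge(a: StreamRequest, b: StreamRequest, max_gap_ms: int = 3_600_000) -> Optional[StreamRequest]:
    """Return the merged request, or None if the criteria are not met."""
    if a.stream_id != b.stream_id or a.params != b.params:
        return None
    if interval_gap_ms(a, b) >= max_gap_ms:
        return None
    return StreamRequest(a.stream_id, a.params, min(a.start_ms, b.start_ms), max(a.end_ms, b.end_ms))
```

In this model, max_gap_ms=0 makes every pair fail the gap check, which is consistent with setting lhub.stream.rateLimitterMaxTimeRangeGap=0 to turn merging off.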

Turn Off Merging/Coalescing of Requests

If your on-demand streams do not behave as expected because requests are coalesced into fewer requests, you can turn off coalescing by setting either of the following properties:

- Set the queue size to 1:

lhub.stream.rateLimitterQueueSize=1

- Or, set the maximum time range gap to 0:

lhub.stream.rateLimitterMaxTimeRangeGap=0

There may be a slight performance penalty, as the requests that could have been merged will now run independently.

Recommendations When Merging/Coalescing of Requests Is Turned Off

- First, turn off merging:

lhub.stream.rateLimitterMaxTimeRangeGap=0

- Find out your concurrency:

execution_time = Average batch execution time (in minutes)
n = Number of Webhook requests per minute
concurrency = n * execution_time

- Change the following concurrency properties:

a. Increase the number of Webhook requests that can run in parallel:

lhub.stream.rateLimitterPermits=<concurrency>

b. Set the refill time to the average batch execution time, in milliseconds:

lhub.stream.rateLimitterRefillTime=<execution_time in milliseconds>

Note

These numbers may require tweaking, based on deployment size and variations in execution time. For example, if most requests execute in under 5 minutes and requests arrive at a steady rate (or with occasional spikes), use:

lhub.stream.rateLimitterRefillTime=360000 // 6 minutes

- Change the queue size:

If the rate limit is exceeded, requests are queued. The queue size is controlled by:

lhub.stream.rateLimitterQueueSize=20

This is useful when there are occasional spikes. Requests are rejected if the queue is full, and the Webhook API returns the HTTP error TOO_MANY_REQUESTS (status code 429).

Note

This queue is an in-memory queue and is designed to absorb occasional spikes. Therefore, any LogicHub application restart will lose the queue data. If requests take less time to process, then a larger queue would be fine. Otherwise, you can increase the parallelism.
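The arithmetic in the recommendations can be scripted. The following Python sketch computes suggested values from two measurements; recommend_limits is a hypothetical helper, rounding concurrency up to a whole number of permits is an assumption, and the optional headroom factor reflects the note that the numbers may need tweaking (for example, 5 minutes with a 1.2 factor gives 360000 ms).

```python
import math
from typing import Dict


def recommend_limits(avg_execution_minutes: float, requests_per_minute: float, headroom: float = 1.0) -> Dict[str, int]:
    """Suggest rate-limiter properties when request merging is turned off."""
    # concurrency = n * execution_time, rounded up to whole permits.
    concurrency = requests_per_minute * avg_execution_minutes
    # Refill time = average execution time, optionally padded, converted to milliseconds.
    refill_minutes = avg_execution_minutes * headroom
    return {
        "lhub.stream.rateLimitterMaxTimeRangeGap": 0,
        "lhub.stream.rateLimitterPermits": max(1, math.ceil(concurrency)),
        "lhub.stream.rateLimitterRefillTime": round(refill_minutes * 60_000),
    }
```

For example, with an average execution time of 5 minutes and 2 Webhook requests per minute, the sketch suggests 10 permits and a refill time of 300000 ms.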

Properties Reference

// The stream rate limiter uses a token bucket policy. This setting controls the time after which tokens are refilled.
// Changing this property requires a restart of the service.
// Default value is 5 minutes.
lhub.stream.rateLimitterRefillTime=300000

// This controls the number of tokens in the stream rate limiter.
// Default value is 5.
lhub.stream.rateLimitterPermits=5

// This controls how many requests can be queued after all tokens are consumed.
// Changing this property requires a restart of the service.
// Default value is 20.
lhub.stream.rateLimitterQueueSize=20

// This controls coalescing of stream invocations: if the gap between the intervals of two
// invocations of the same stream is less than this time, they are candidates for coalescing.
// Default value is 1 hour.
lhub.stream.rateLimitterMaxTimeRangeGap=3600000
