AWS CloudWatch Logs

Version: 2.0.0

CloudWatch Logs lets you centralize the logs from all of your systems and applications, and from the AWS services you use, in a single, highly scalable service.

Connect Amazon CloudWatch Logs with LogicHub

- Navigate to Automations > Integrations.

- Search for Amazon CloudWatch Logs.

- Click Details, then the + icon. Enter the required information in the following fields.

- Label: Enter a connection name.

- Reference Values: Define variables here to templatize integration connections and actions. For example, in https://www.{{hostname}}.com, hostname is a variable defined in this input. For more information on how to add data, see 'Add Data' Input Type for Integrations.

- Verify SSL: Select this option to verify the connecting server's SSL certificate (default: Verify SSL Certificate).

- Remote Agent: Run this integration using the LogicHub Remote Agent.

- Region Name: A valid AWS Region name, such as us-east-1. (For a list of available regions, see the AWS Regions documentation.)

- Access Key: The access key ID of your AWS account.

- Secret Key: The secret access key of your AWS account.

- After you've entered all the details, click Connect.
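The Reference Values field uses {{variable}} placeholders. The sketch below illustrates how such a placeholder resolves, using a hypothetical `render_template` helper and a hypothetical `hostname` value. It is a simplified regex substitution, not LogicHub's or Jinja's actual renderer, and it handles plain variable names only.

```python
import re

# Hypothetical reference value, as it might be defined in the connection's
# "Reference Values" field.
reference_values = {"hostname": "example"}


def render_template(template: str, values: dict) -> str:
    """Replace each {{name}} placeholder with values[name].

    Simplified stand-in: handles plain variable names only, not full Jinja
    syntax such as filters or expressions.
    """

    def substitute(match: re.Match) -> str:
        name = match.group(1)
        if name not in values:
            raise KeyError(f"undefined reference value: {name}")
        return str(values[name])

    return re.sub(r"\{\{\s*(\w+)\s*\}\}", substitute, template)


url = render_template("https://www.{{hostname}}.com", reference_values)
print(url)  # https://www.example.com
```

Raising on an undefined name, rather than leaving the placeholder in place, makes a misspelled variable fail loudly instead of producing a malformed URL.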

Actions for Amazon CloudWatch Logs

Get Log Events

Lists log events from the specified log stream.

Input Fields

Choose a connection that you have previously created, then fill in the following input fields to configure the action.

| Input Name | Description | Required |
|---|---|---|
| Log Group Column name | Column name from the parent table holding the value for Log Group. | Required |
| Log Stream Column name | Column name from the parent table holding the value for Log Stream. | Required |
| Start Time Column name | Column name from the parent table holding the value for Start Time, expressed as epoch seconds (default is the batch start time). Events with a timestamp equal to or later than this time are included. | Optional |
| End Time Column name | Column name from the parent table holding the value for End Time, expressed as epoch seconds (default is the batch end time). Events with a timestamp later than this time are not returned. | Optional |
| Limit Column name | Column name from the parent table holding the value for Limit, the maximum number of events to return (default is 1,000 events). | Optional |

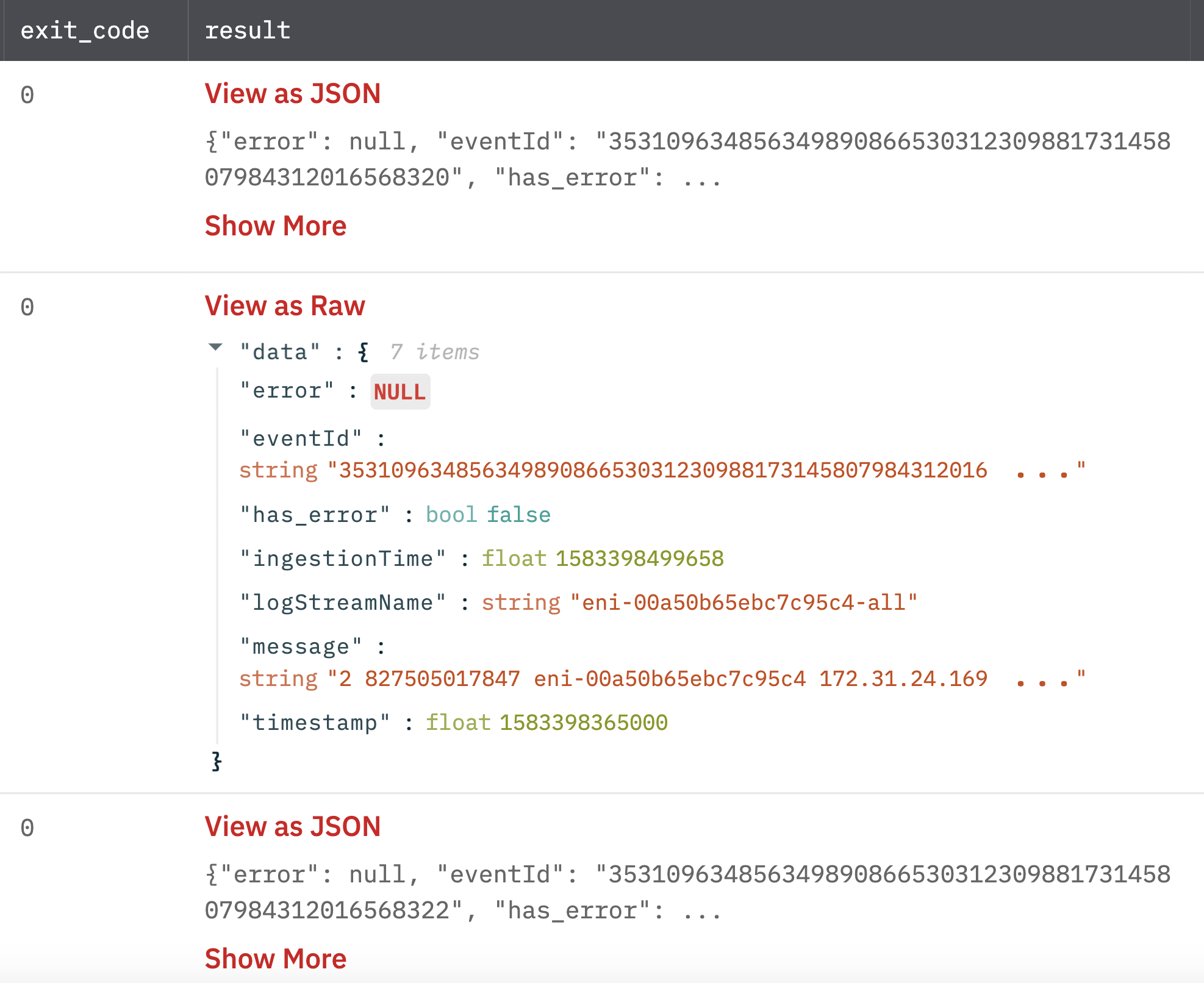
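As a rough sketch of how one parent-table row might map onto a request, the snippet below builds parameters named after the CloudWatch Logs `GetLogEvents` API (`logGroupName`, `logStreamName`, `startTime`, `endTime`, `limit`). The helper `build_get_log_events_params`, the column names, and the batch times are hypothetical. The API expects epoch milliseconds, so the sketch assumes the integration multiplies the documented epoch-second inputs by 1000; that conversion is an assumption about the integration's internals.

```python
from typing import Optional

DEFAULT_LIMIT = 1000  # documented default for the Limit input


def build_get_log_events_params(
    row: dict,
    log_group_col: str,
    log_stream_col: str,
    batch_start: int,
    batch_end: int,
    start_col: Optional[str] = None,
    end_col: Optional[str] = None,
    limit_col: Optional[str] = None,
) -> dict:
    """Map one parent-table row to GetLogEvents-style request parameters.

    Hypothetical helper. Optional columns fall back to the batch window and
    the documented 1,000-event limit. Epoch seconds are converted to epoch
    milliseconds, which the CloudWatch Logs API expects (assumed conversion).
    """
    start = int(row[start_col]) if start_col else batch_start
    end = int(row[end_col]) if end_col else batch_end
    limit = int(row[limit_col]) if limit_col else DEFAULT_LIMIT
    return {
        "logGroupName": row[log_group_col],
        "logStreamName": row[log_stream_col],
        "startTime": start * 1000,
        "endTime": end * 1000,
        "limit": limit,
    }


# Hypothetical parent-table row with a per-row start time but no end time or limit.
row = {"group": "/aws/lambda/my-func", "stream": "app-1", "start": 1700000000}
params = build_get_log_events_params(
    row, "group", "stream",
    batch_start=1699990000, batch_end=1700003600,
    start_col="start",
)
print(params)
```

With boto3, a dictionary shaped like this could be passed as `boto3.client("logs").get_log_events(**params)`; whether the integration itself uses boto3 is not stated here.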

Output

A JSON object containing multiple result rows with the following fields:

- has_error: True/False

- error: message/null

- result: A list of dictionaries, each describing one log event.
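A downstream step might consume this output as shown below. The sample payload is illustrative, not captured output; its event fields (`timestamp`, `message`, `ingestionTime`) mirror what the `GetLogEvents` API returns, but the exact keys the integration emits are assumed. The helper `extract_messages` is hypothetical.

```python
import json

# Illustrative output row (not captured from a real run). Event keys mirror the
# GetLogEvents API; the exact keys the integration emits are assumed.
raw = """
{
  "has_error": false,
  "error": null,
  "result": [
    {"timestamp": 1700000000000, "message": "START RequestId: 1", "ingestionTime": 1700000000500},
    {"timestamp": 1700000001000, "message": "ERROR Timeout", "ingestionTime": 1700000001500}
  ]
}
"""


def extract_messages(output: dict) -> list:
    """Return event messages in order, or raise if the action reported an error."""
    if output.get("has_error"):
        raise RuntimeError(output.get("error") or "unknown error")
    # Treat a null result as an empty list of events.
    return [event["message"] for event in output.get("result") or []]


messages = extract_messages(json.loads(raw))
print(messages)
```

Checking `has_error` first keeps an error message from being mistaken for an empty result.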

Filter Log Events

Lists log events from the specified log group.

Input Fields

Choose a connection that you have previously created, then fill in the following input fields to configure the action.

| Input Name | Description | Required |
|---|---|---|
| Log Group Column name | Column name from the parent table holding the value for Log Group. | Required |
| Log Streams | Jinja template holding the value for Log Streams (default is all streams). Example: {{stream1}},{{stream2}}. | Optional |
| Log Stream Prefix Column name | Column name from the parent table holding the value for Log Stream Prefix (default is all streams). | Optional |
| Start Time Column name | Column name from the parent table holding the value for Start Time, expressed as epoch seconds (default is the batch start time). Events with a timestamp equal to or later than this time are included. | Optional |
| End Time Column name | Column name from the parent table holding the value for End Time, expressed as epoch seconds (default is the batch end time). Events with a timestamp later than this time are not returned. | Optional |
| Filter Pattern | Jinja template holding the value for Filter Pattern (default is no filter). Example: {{msg1}} {{msg2}}. For more information, see Filter and Pattern Syntax. | Optional |
| Limit Column name | Column name from the parent table holding the value for Limit, the maximum number of events to return (default is 1,000 events). | Optional |

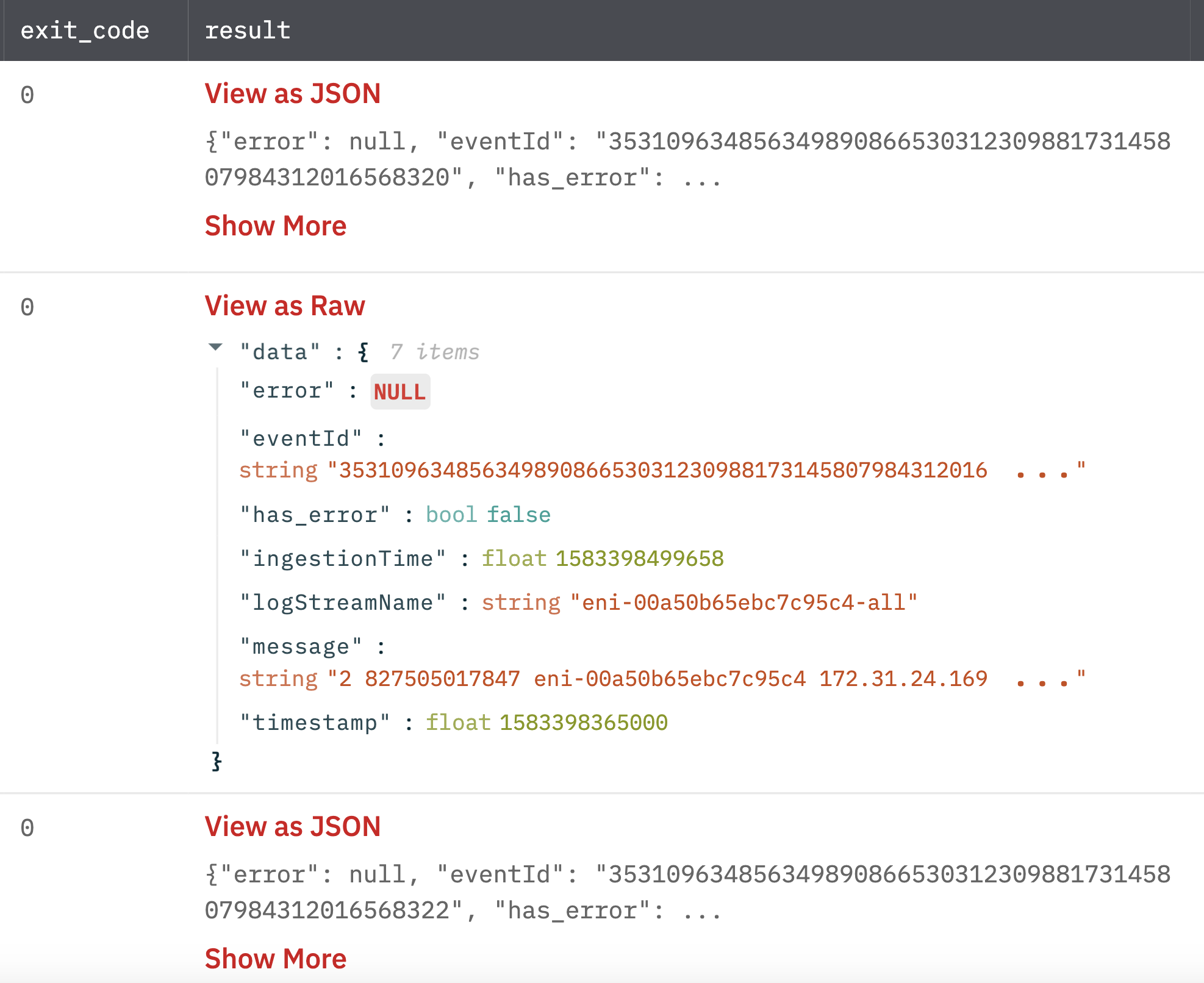
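The Filter Log Events inputs can be sketched the same way. The hypothetical helper below takes already-rendered input values, splits the comma-separated Log Streams value into `logStreamNames`, and omits optional parameters when they are empty, which matches the documented defaults (all streams, no filter). It rejects setting both stream names and a prefix, because the CloudWatch Logs `FilterLogEvents` API returns an `InvalidParameterException` in that case. The epoch-seconds-to-milliseconds conversion is again an assumption about the integration.

```python
from typing import Optional

DEFAULT_LIMIT = 1000  # documented default for the Limit input


def build_filter_log_events_params(
    log_group: str,
    start_seconds: int,
    end_seconds: int,
    log_streams: str = "",
    stream_prefix: Optional[str] = None,
    filter_pattern: str = "",
    limit: int = DEFAULT_LIMIT,
) -> dict:
    """Build FilterLogEvents-style request parameters from rendered inputs.

    Hypothetical helper. log_streams is the rendered "Log Streams" template,
    for example "app-1,app-2".
    """
    params = {
        "logGroupName": log_group,
        "startTime": start_seconds * 1000,  # API expects epoch milliseconds
        "endTime": end_seconds * 1000,
        "limit": limit,
    }
    streams = [s.strip() for s in log_streams.split(",") if s.strip()]
    if streams and stream_prefix:
        # The CloudWatch Logs API rejects requests that set both.
        raise ValueError("specify either log stream names or a prefix, not both")
    if streams:
        params["logStreamNames"] = streams
    elif stream_prefix:
        params["logStreamNamePrefix"] = stream_prefix
    # Omitting filterPattern means no filter, i.e. all events match.
    if filter_pattern:
        params["filterPattern"] = filter_pattern
    return params


params = build_filter_log_events_params(
    "/aws/lambda/my-func", 1700000000, 1700003600,
    log_streams="app-1, app-2", filter_pattern="ERROR Timeout",
)
print(params)
```

Here the rendered Filter Pattern `ERROR Timeout` uses CloudWatch's term syntax, in which space-separated terms must all appear in an event for it to match.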

Output

A JSON object containing multiple result rows with the following fields:

- has_error: True/False

- error: message/null

- result: A list of dictionaries, each describing one log event.
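Because Filter Log Events can return events from several streams, it can help to group the results by stream. This sketch assumes each event dictionary carries a `logStreamName` key, as events returned by the `FilterLogEvents` API do; the helper `group_by_stream` and the sample data are illustrative, not real output.

```python
from collections import defaultdict


def group_by_stream(output: dict) -> dict:
    """Group event messages by log stream name, preserving event order."""
    if output.get("has_error"):
        raise RuntimeError(output.get("error") or "unknown error")
    grouped = defaultdict(list)
    for event in output.get("result") or []:
        # Assumed key; falls back to a placeholder if it is absent.
        grouped[event.get("logStreamName", "<unknown>")].append(event["message"])
    return dict(grouped)


# Illustrative output row (not captured from a real run).
sample = {
    "has_error": False,
    "error": None,
    "result": [
        {"logStreamName": "app-1", "timestamp": 1700000000000, "message": "ERROR Timeout"},
        {"logStreamName": "app-2", "timestamp": 1700000001000, "message": "ERROR Timeout retry"},
        {"logStreamName": "app-1", "timestamp": 1700000002000, "message": "ERROR Timeout again"},
    ],
}
by_stream = group_by_stream(sample)
print(by_stream)
```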

Release Notes

v2.0.0 - Updated architecture to support IO via filesystem.
