LogicHub Training

Welcome to LogicHub’s self-paced training program. In this program, we provide materials that you can walk through at your own pace. After finishing this program, you will be equipped to develop basic playbooks with the LogicHub platform, and enable them to run in production.

It is structured as follows:

- A 90-minute video that broadly walks through the key areas of the product.

- A study guide that can be read cover-to-cover to gain an overall understanding of the product.

- A series of hands-on exercises. We think the best way to learn is by doing, so you can work through them to gain actual experience building playbooks. Each exercise consists of:

  - A problem statement,

  - Some sample data,

  - A video with an instructor completing the exercise from start to finish, and

  - The finished playbook in an exported format. You can download and import it to your deployment to compare with your own work.

- Finally, the full online documentation at https://help.logichub.com

90-minute Product Walk-through

Self-study Training Guide

It is a 40-page guide that can be read start to finish to get a progressively deeper understanding of what you can do, and how to do it, with the platform. Download it here:

https://logichub-training.s3-us-west-2.amazonaws.com/study-guide/logichub-training-guide.pdf

Exercise #1: Simple Data Analysis -- Create a Playbook to Find the Top 10 Sources

What is the goal of the exercise?

Read SSH authentication logs from a file. Use the logs to identify the 10 sources with the most failed login attempts.
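Outside the platform, the same analysis can be sketched in a few lines of Python. This is only an illustration of the idea, not the playbook from the video: the column names `source_ip` and `status`, the value `Failed`, and the sample rows are all assumptions, and the real ssh.csv may be laid out differently.

```python
import csv
import io
from collections import Counter

# Hypothetical sample rows; the real ssh.csv may use different column names.
SAMPLE_CSV = """source_ip,user,status
10.0.0.1,root,Failed
10.0.0.2,admin,Accepted
10.0.0.1,root,Failed
10.0.0.3,guest,Failed
"""


def top_failed_sources(csv_text, n=10):
    """Return up to n (source, count) pairs, ordered by failed-login count."""
    reader = csv.DictReader(io.StringIO(csv_text))
    # Keep only failed attempts, then count them per source address.
    counts = Counter(
        row["source_ip"] for row in reader if row["status"] == "Failed"
    )
    return counts.most_common(n)


top_sources = top_failed_sources(SAMPLE_CSV)
print(top_sources)
```

The three steps (filter, group and count, sort and limit) are the same ones you would express with nodes in a playbook.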

Sample data file:

https://logichub-training.s3-us-west-2.amazonaws.com/sample-data/ssh.csv

Finished playbook:

Download here: simple_data_analysis.json

Walkthrough with an instructor (11 min):

Exercise #2: Using an Integration to Enrich Your Data

What is the goal of the exercise?

Read a file containing some random IP addresses and run an ARIN WHOIS check (as an integration) on them. Then extract useful information from the results of the integration.

Sample data file:

https://logichub-training.s3-us-west-2.amazonaws.com/sample-data/random-ip-addresses.csv

Finished playbook:

Download here: using-an-integration.json

Walkthrough with an instructor (8 min):

Exercise #3: Run the Playbook Continuously -- Using Stream

What is the goal of the exercise?

With a playbook that filters and outputs some data, create a stream that runs it every 30 minutes.

Walkthrough with an instructor (4 min):

Exercise #4: Manipulating JSON to Extract Data

What is the goal of the exercise?

With a list of IP addresses, run a VirusTotal check on each of them. Then extract useful information about those IP addresses from the JSON result returned by VirusTotal. Several important techniques for manipulating JSON are demonstrated.
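As a rough illustration of what "extracting useful information from JSON" means, the sketch below parses a nested result and pulls out a few fields. The payload is hypothetical and heavily simplified; it is not the actual VirusTotal response format, and the field names (`result`, `detections`, `verdict`) are assumptions made for this example only.

```python
import json

# Hypothetical, simplified payload. A real VirusTotal response is larger
# and structured differently.
RAW_RESULT = """
{
  "ip": "203.0.113.7",
  "result": {
    "country": "US",
    "detections": [
      {"engine": "EngineA", "verdict": "malicious"},
      {"engine": "EngineB", "verdict": "clean"}
    ]
  }
}
"""


def summarize(raw):
    """Flatten a nested JSON result into a small dictionary of useful fields."""
    doc = json.loads(raw)
    # Use .get with defaults so a missing key does not raise an error.
    result = doc.get("result", {})
    detections = result.get("detections", [])
    malicious = [d["engine"] for d in detections if d.get("verdict") == "malicious"]
    return {
        "ip": doc.get("ip"),
        "country": result.get("country"),
        "malicious_count": len(malicious),
        "malicious_engines": malicious,
    }


summary = summarize(RAW_RESULT)
print(summary)
```

The pattern of walking into nested objects, iterating over an array, and flattening the result into columns is what the exercise practices inside the playbook.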

Sample data file:

https://logichub-training.s3-us-west-2.amazonaws.com/sample-data/random-ip-addresses.csv

Finished playbook:

Download here: manipulating-json.json

Walkthrough with an instructor (26 min):

Exercise #5: Using Operators and Spark Functions

What is the goal of the exercise?

Walk through operators and Apache Spark functions to perform a few operations, e.g., exploring custom lists and operating on strings.

Sample data file:

No sample data. The playbook will generate some data with operators.

Finished playbook:

Download here: using-operators-and-spark-functions.json

Walkthrough with an instructor (24 min):

Exercise #6: Joins

What is the goal of the exercise?

Demonstrate 3 different approaches to joining two tables, from using LogicHub operators to writing a SQL LEFT JOIN.
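For readers new to SQL, the sketch below shows what a LEFT JOIN does: every row of the left table is kept, and columns from the right table are empty where no match exists. It uses Python's built-in SQLite as a stand-in engine, not the LogicHub platform, and the table names, column names, and rows are hypothetical rather than taken from the exercise files.

```python
import sqlite3

# In-memory SQLite database used purely as a stand-in SQL engine.
conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE table1 (id INTEGER, name TEXT);
    CREATE TABLE table2 (id INTEGER, department TEXT);
    INSERT INTO table1 VALUES (1, 'alice'), (2, 'bob'), (3, 'carol');
    INSERT INTO table2 VALUES (1, 'engineering'), (3, 'finance');
    """
)

# LEFT JOIN keeps every row from table1, matched or not.
rows = conn.execute(
    """
    SELECT t1.id, t1.name, t2.department
    FROM table1 AS t1
    LEFT JOIN table2 AS t2 ON t1.id = t2.id
    ORDER BY t1.id
    """
).fetchall()
conn.close()

for row in rows:
    print(row)
```

Here `bob` has no matching row in `table2`, so his `department` comes back as `None` (SQL NULL) instead of the row being dropped, which is the difference from an inner join.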

Sample data files:

joins-table-1.csv and joins-table-2.csv

Playbooks:

Starting playbook: joins-initial.json, and

Finished playbook: joins-complete.json

Preparation:

1. Import the starting playbook. Download joins-initial.json listed above and drag it onto the Playbooks page. After this, you will have a playbook named Joins Initial Playbook that has two nodes in it. They reference two custom lists.

2. Download joins-table-1.csv and joins-table-2.csv. These files contain the data to be added to the custom lists.

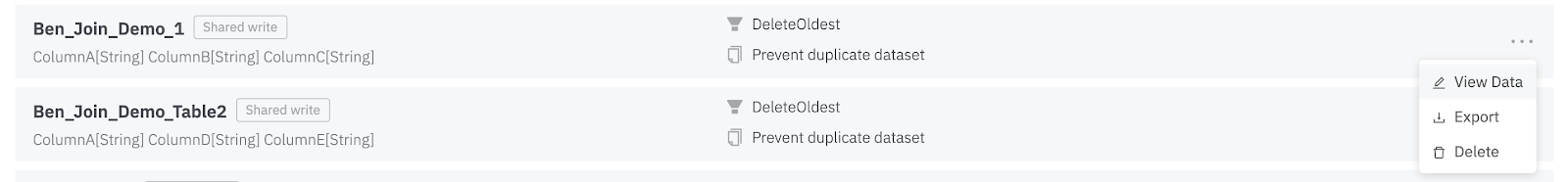

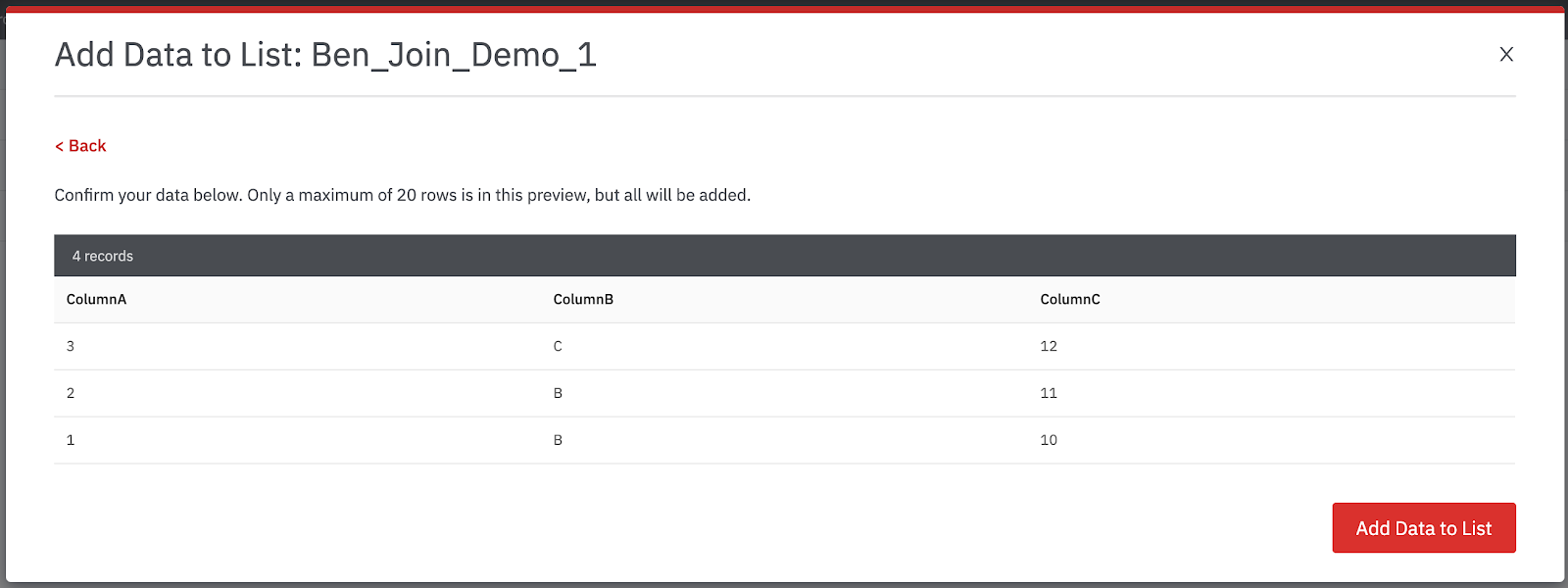

3. Populate the custom lists for the first 2 tables so you can perform the joins. To do this, you navigate to Data → Custom Lists. You will find two custom lists, Ben_Join_Demo_1 and Ben_Join_Demo_Table2. Click the 3-dot menu icon on the right hand side and choose View Data, as shown below:

4. Then click on the Add Data button.

5. For the Ben_Join_Demo_1 custom list, drag and drop joins-table-1.csv and then click the Add Data To List button.

6. Repeat that for Ben_Join_Demo_Table2, using the joins-table-2.csv file.

7. You are now ready to follow along with the video.

Walkthrough with an instructor (6 min):

Exercise #7: Basic Scoring

What is the goal of the exercise?

Read proxy logs and collect the IP addresses with high or low data upload volumes. Then annotate them as High Risk or Low Risk based on their scores.
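The scoring idea can be sketched as a simple mapping from upload volume to a score, followed by a threshold that assigns the label. Everything numeric here is an assumption for illustration: the 0 to 10 scale, the 10 MB cap, the threshold of 5, and the sample IP addresses are hypothetical and are not taken from proxy.csv or from the finished playbook.

```python
def score_upload(bytes_uploaded, max_bytes):
    """Scale an upload volume to a score between 0 and 10 (assumed scale)."""
    if max_bytes <= 0:
        return 0.0
    # Cap at max_bytes so unusually large uploads do not exceed a score of 10.
    capped = min(bytes_uploaded, max_bytes)
    return round(10 * capped / max_bytes, 1)


def label(score, threshold=5.0):
    """Annotate a score as High Risk or Low Risk using an assumed threshold."""
    return "High Risk" if score >= threshold else "Low Risk"


# Hypothetical per-IP upload totals in bytes.
uploads = {"10.0.0.5": 9_000_000, "10.0.0.6": 120_000}
MAX_BYTES = 10_000_000  # assumed cap for normalization

annotated = {ip: label(score_upload(total, MAX_BYTES)) for ip, total in uploads.items()}
print(annotated)
```

Keeping the score and the label as separate steps makes it easy to adjust the threshold later without changing how the score itself is computed.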

Sample data file:

https://logichub-training.s3-us-west-2.amazonaws.com/sample-data/proxy.csv

Finished playbook:

Download here: basic-scoring.json

Walkthrough with an instructor (14 min):

Exercise #8: Case Management

The video demonstrates how you can use the Case Management portion of the LogicHub application to manually create cases and work them through to resolution. The next exercise will cover the creation of cases from an automation playbook.

LogicHub, Inc. © 2020